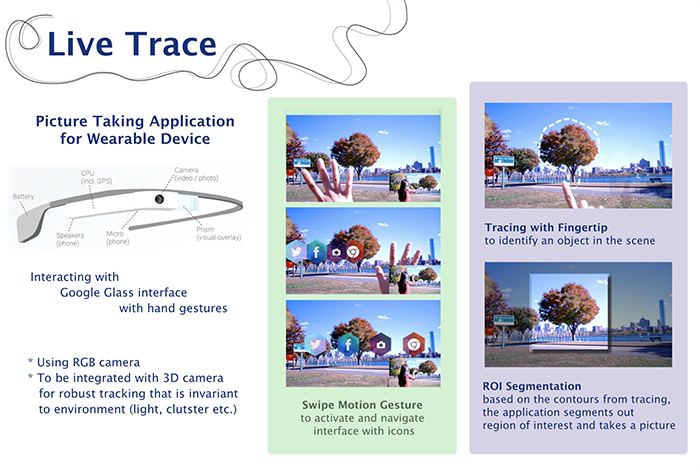

Live Trace

In this interactive experience, we are interested in enabling quick input actions on Google Glass. The application lets users trace an object or region of interest in their live view, and the trace serves as the foundation for indicating interest in a visual region. Once a region is selected, the user can apply filters to it, annotate it through speech input, or capture its text through optical character recognition. These selection and processing tools could integrate naturally with quick note-taking applications, where the limited touchpad makes conventional text entry impractical. The Live Trace app demonstrates the effectiveness of gestural control for head-mounted displays.
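As a rough illustration of how a traced path could be turned into a selectable region, the sketch below converts a list of trace points into a bounding box and a per-pixel mask using an even-odd ray-casting test. This is not the Live Trace implementation, which the description above does not detail; the function names (`bounding_box`, `inside`, `region_mask`) and the assumption that the trace arrives as a closed polygon of (x, y) pixel coordinates are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # assumed format: (x, y) in image pixel coordinates


def bounding_box(trace: List[Point]) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) bounds enclosing the trace.

    right and bottom are exclusive, so they can be used directly in range().
    """
    xs = [p[0] for p in trace]
    ys = [p[1] for p in trace]
    return (math.floor(min(xs)), math.floor(min(ys)),
            math.ceil(max(xs)), math.ceil(max(ys)))


def inside(trace: List[Point], x: float, y: float) -> bool:
    """Even-odd ray casting: is (x, y) inside the trace, treated as closed?"""
    hit = False
    n = len(trace)
    for i in range(n):
        x1, y1 = trace[i]
        x2, y2 = trace[(i + 1) % n]  # wrap around to close the path
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                hit = not hit
    return hit


def region_mask(trace: List[Point], width: int, height: int) -> List[List[bool]]:
    """Build a height x width mask that is True for pixels inside the trace."""
    left, top, right, bottom = bounding_box(trace)
    # Clamp to the frame so a trace that leaves the view does not index out of range.
    left, top = max(left, 0), max(top, 0)
    right, bottom = min(right, width), min(bottom, height)
    mask = [[False] * width for _ in range(height)]
    for row in range(top, bottom):
        for col in range(left, right):
            # Test the pixel centre rather than its corner.
            mask[row][col] = inside(trace, col + 0.5, row + 0.5)
    return mask


# Example: a rectangular trace on a small 8 x 6 frame.
trace = [(1, 1), (5, 1), (5, 4), (1, 4)]
mask = region_mask(trace, width=8, height=6)
selected = sum(cell for row in mask for cell in row)
```

In a pipeline like the one described, a mask or bounding box of this kind would be the natural input for the later steps: a filter could be applied only where the mask is true, and the bounding-box crop could be passed to an OCR engine.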

This project is part of the Mime project.

Hye Soo Yang, Andrea Colaço, and Chris Schmandt

Live Trace Outdoors Demonstration

Live Trace Demonstration with Speech Input & Live Card

Live Trace Demonstration with Optical Character Recognition